Validating NLP data and models - Nir Hutnik

Abstract:

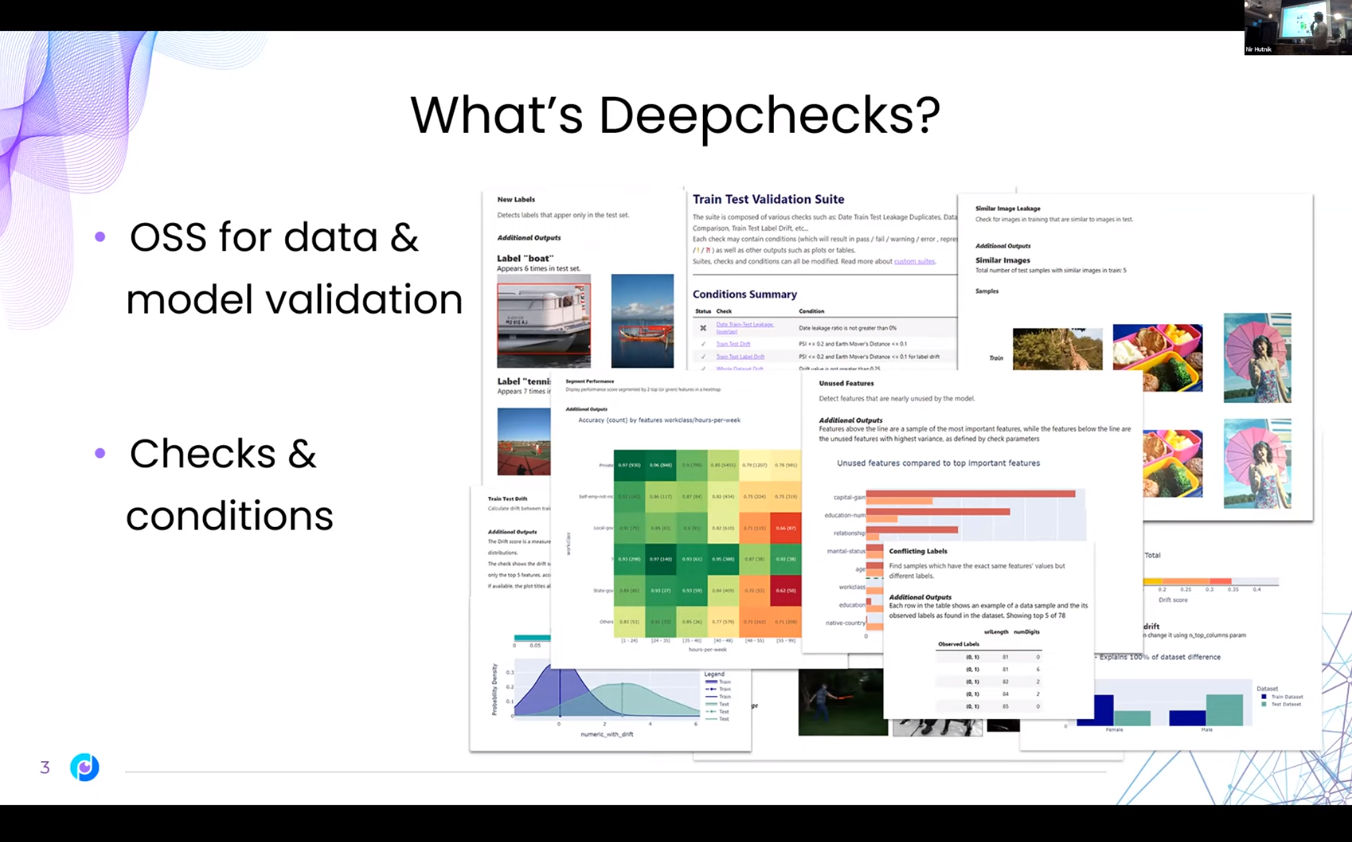

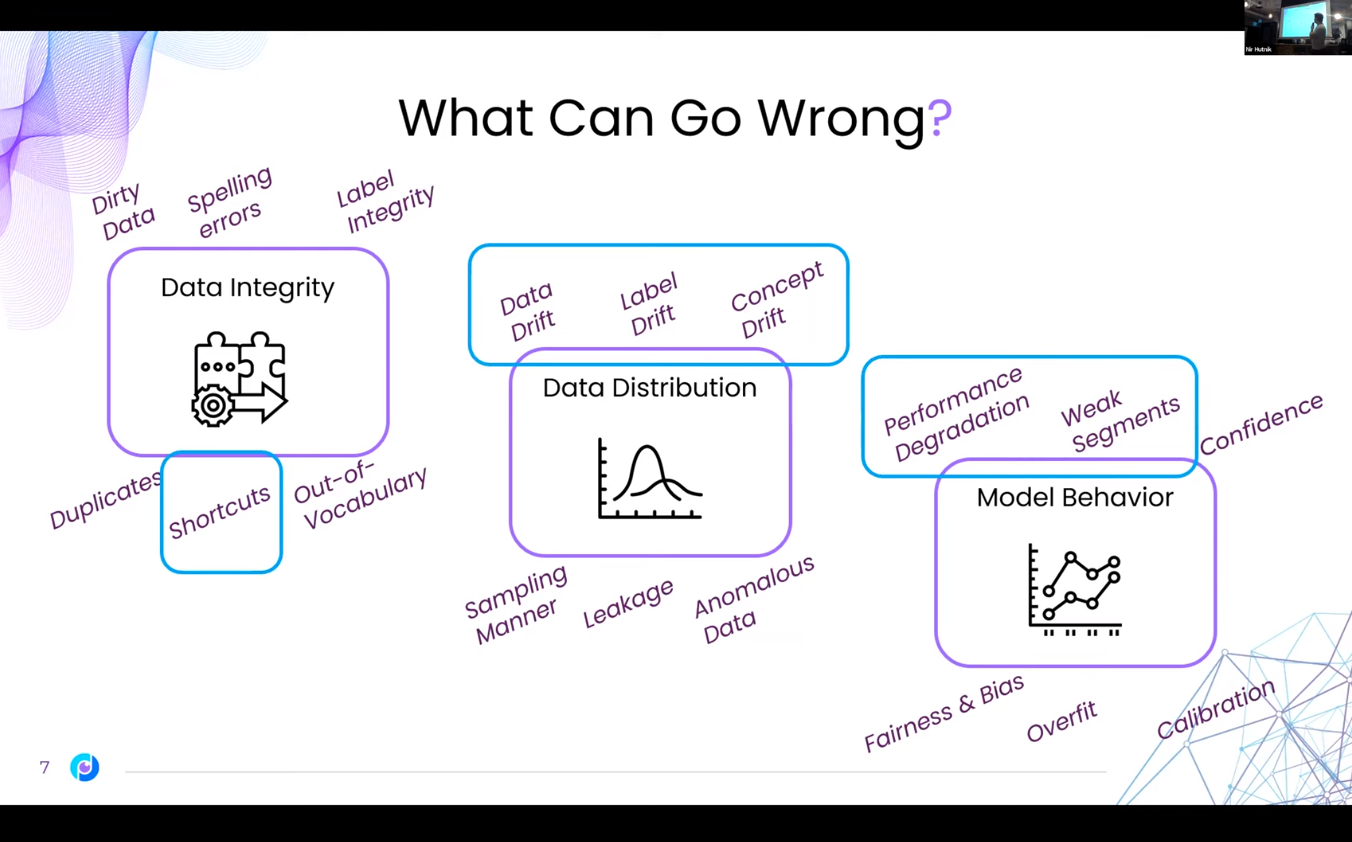

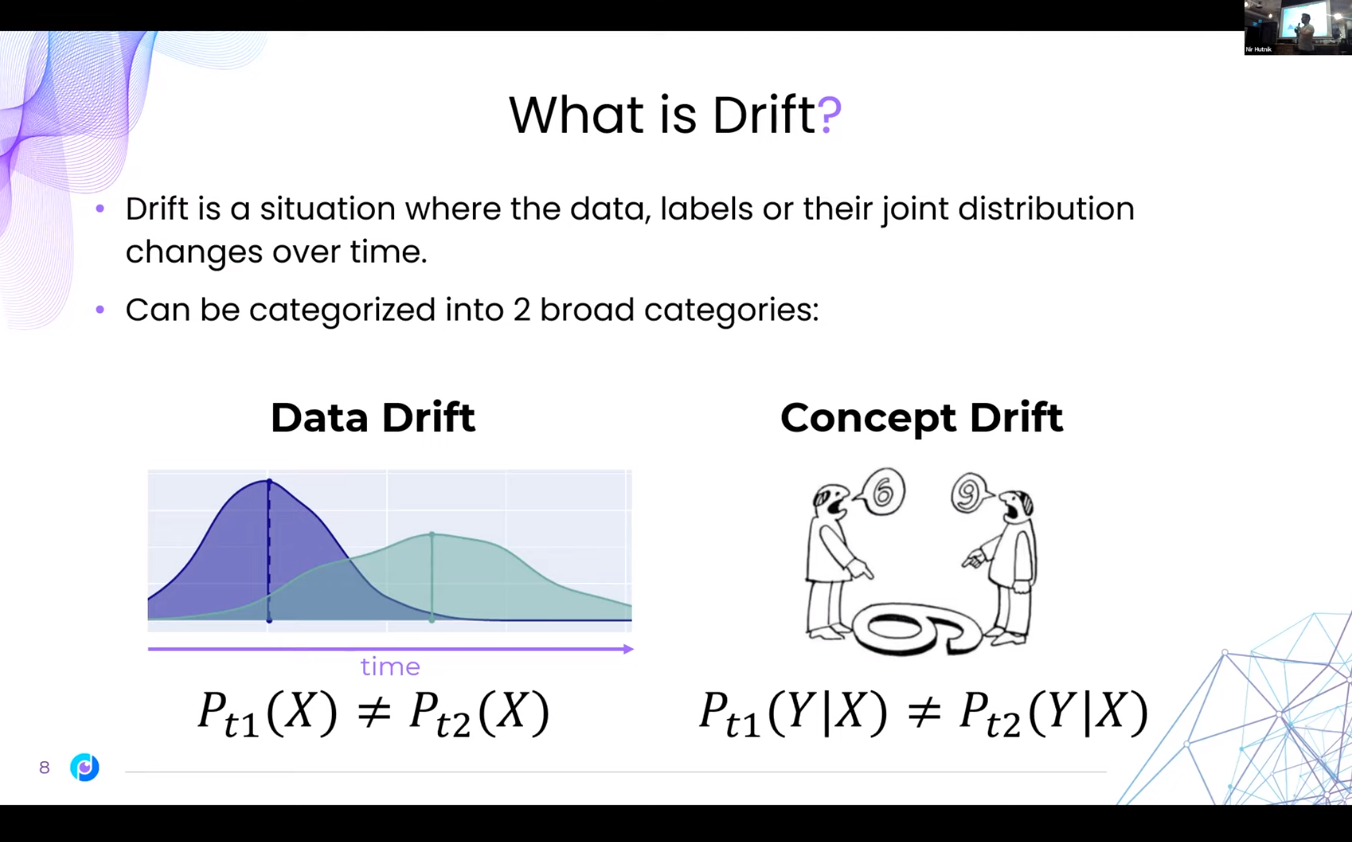

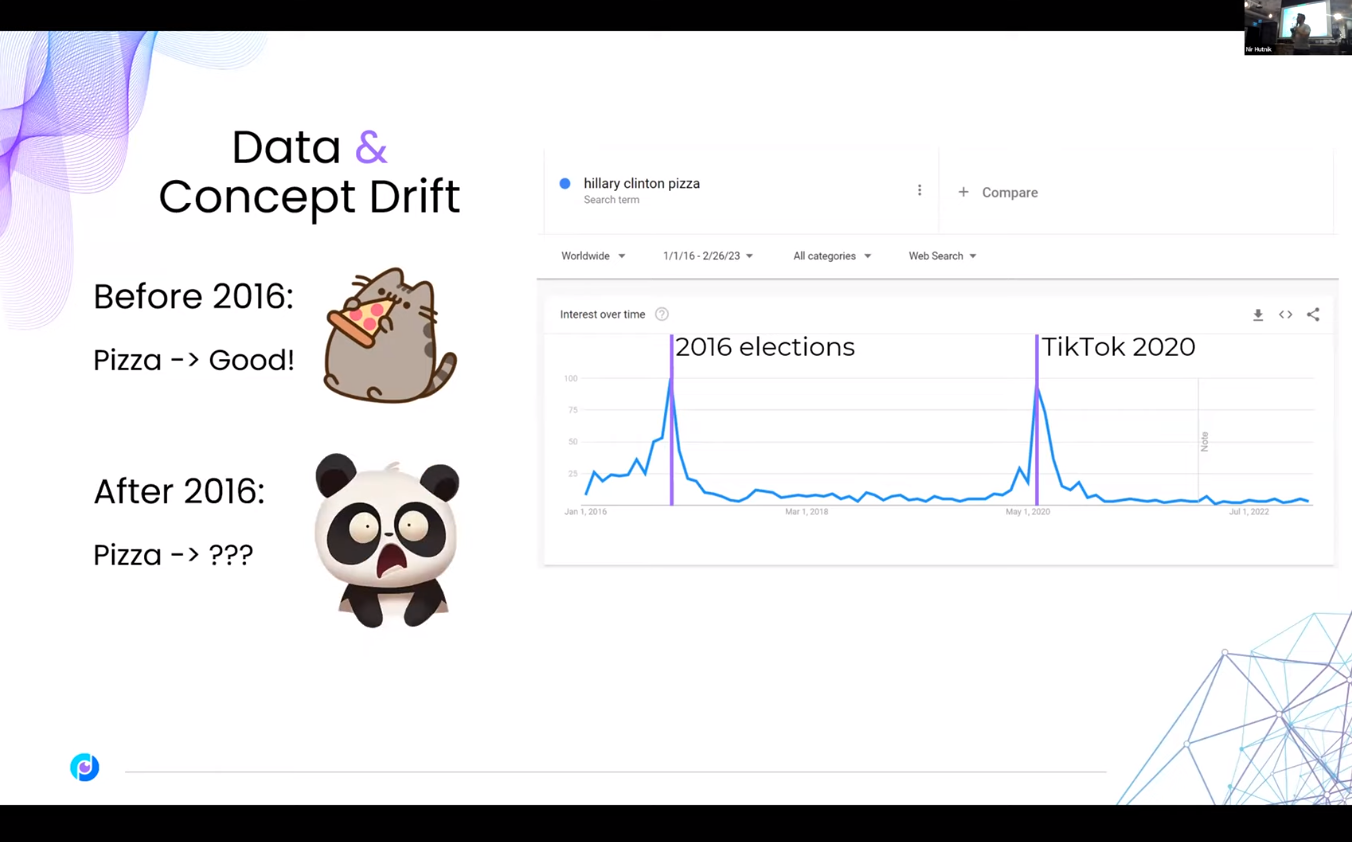

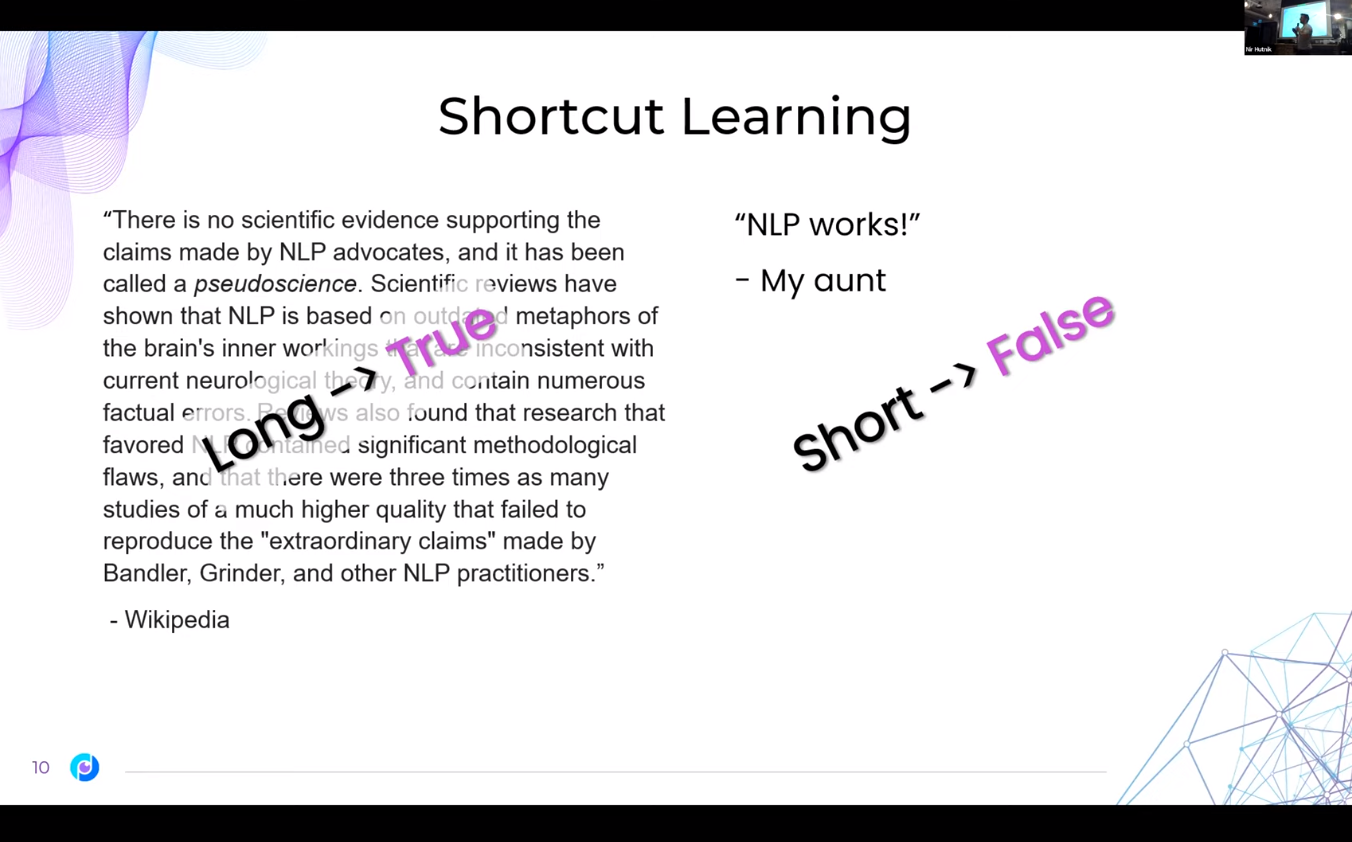

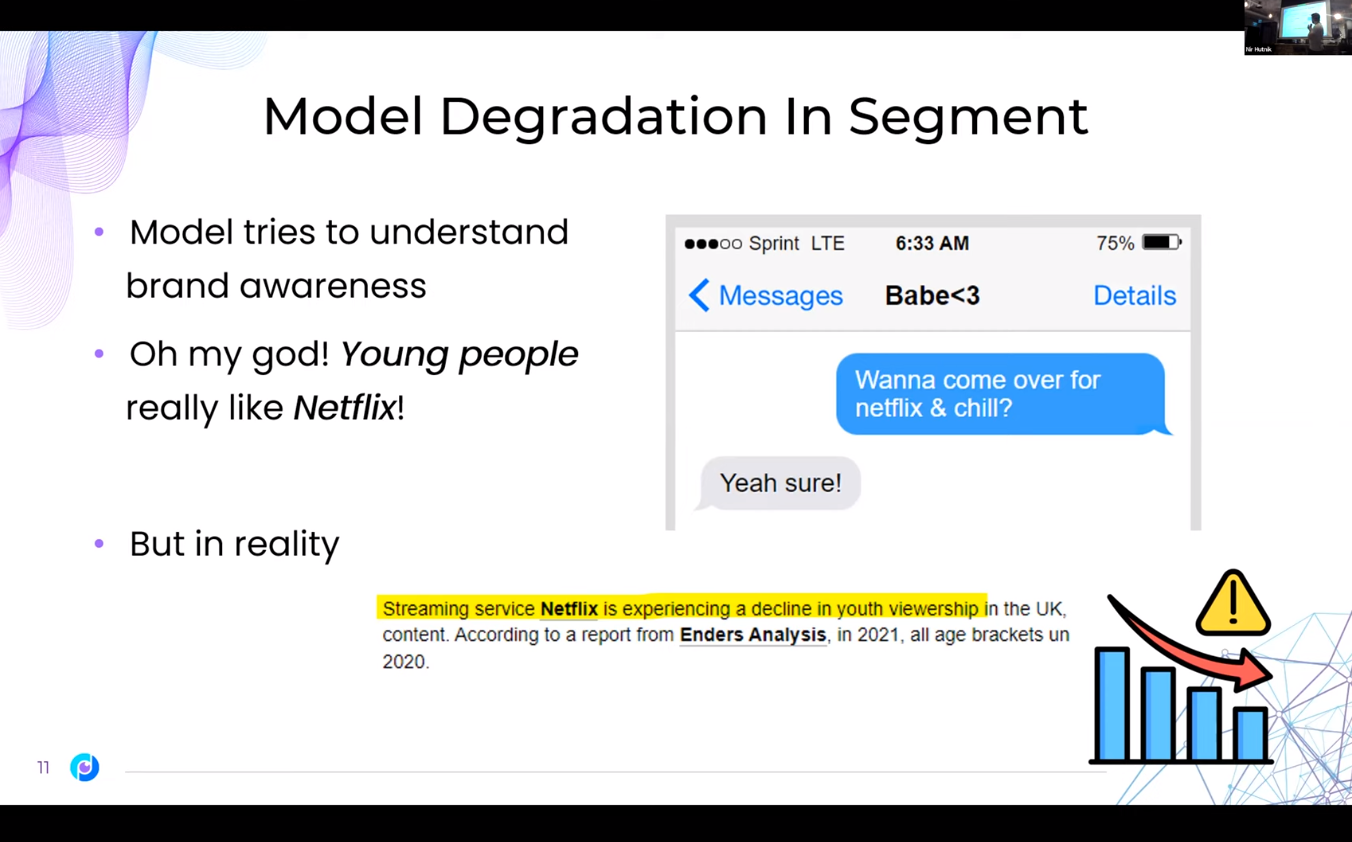

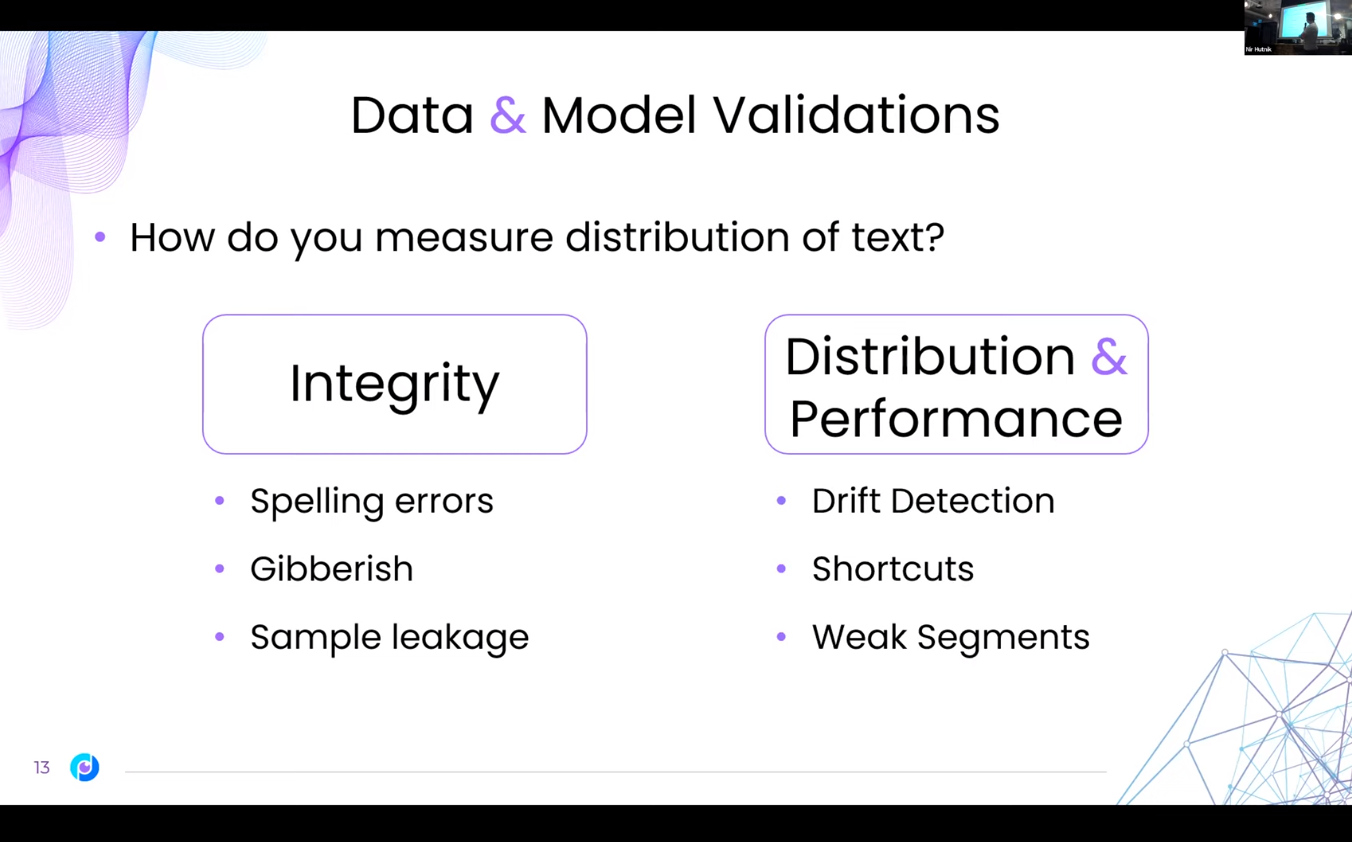

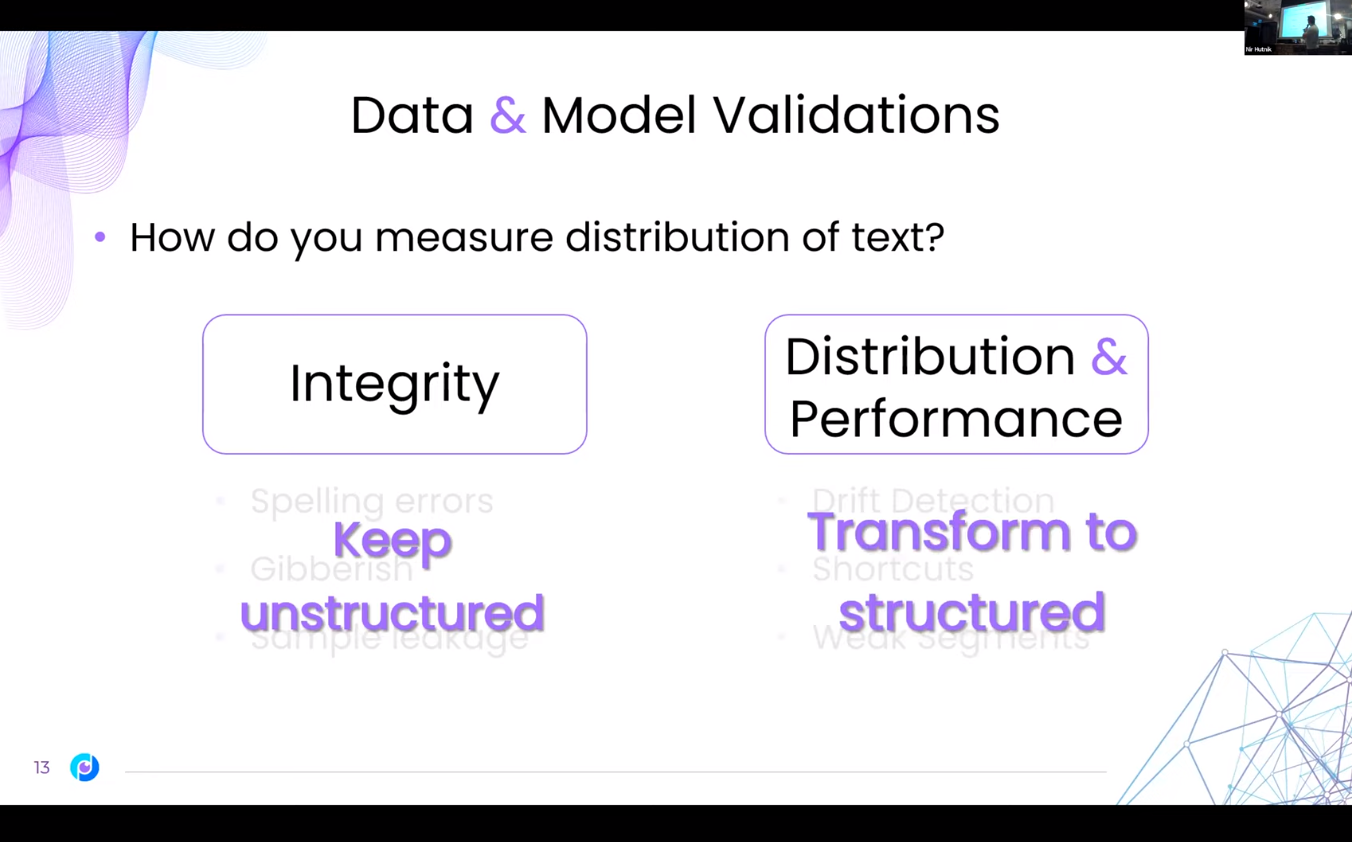

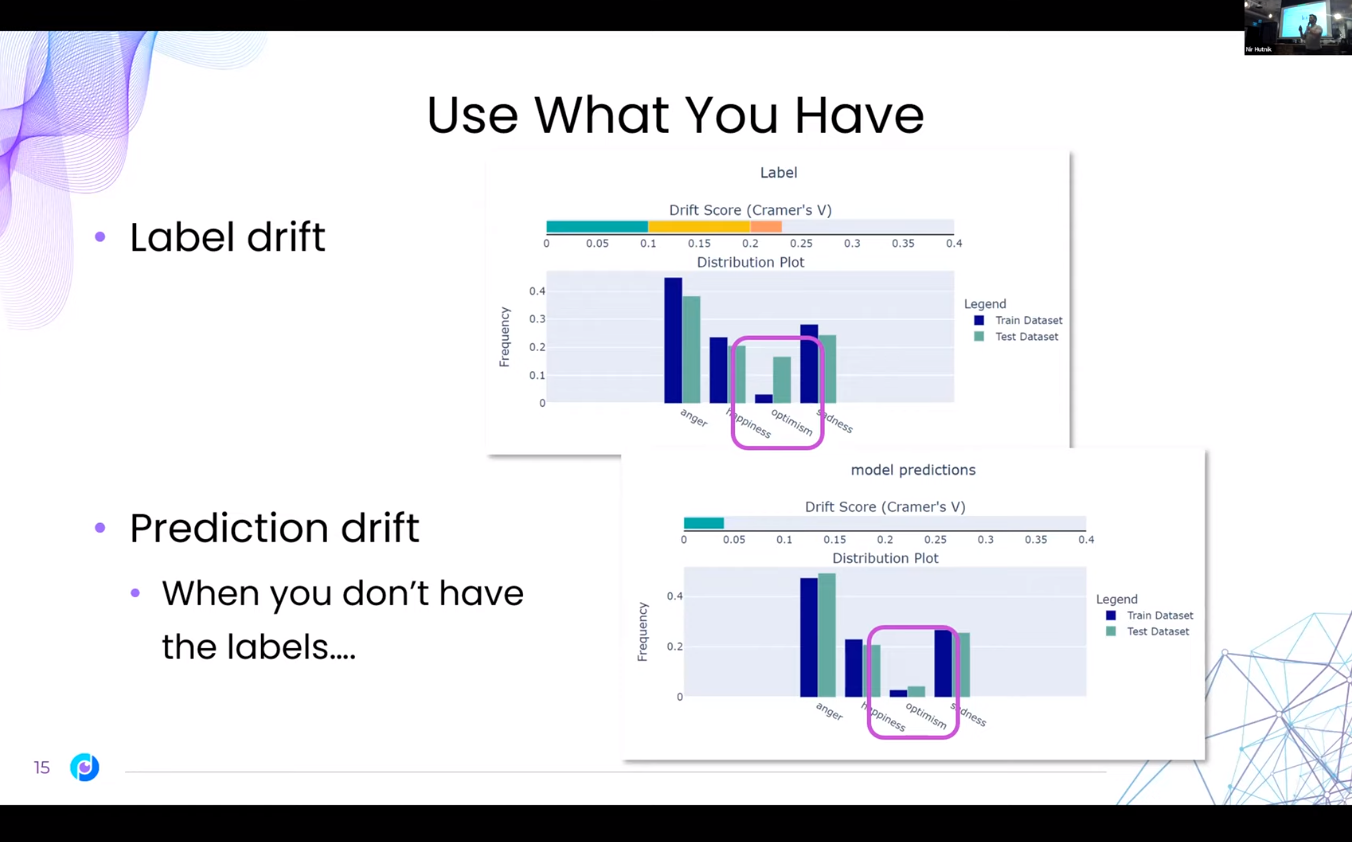

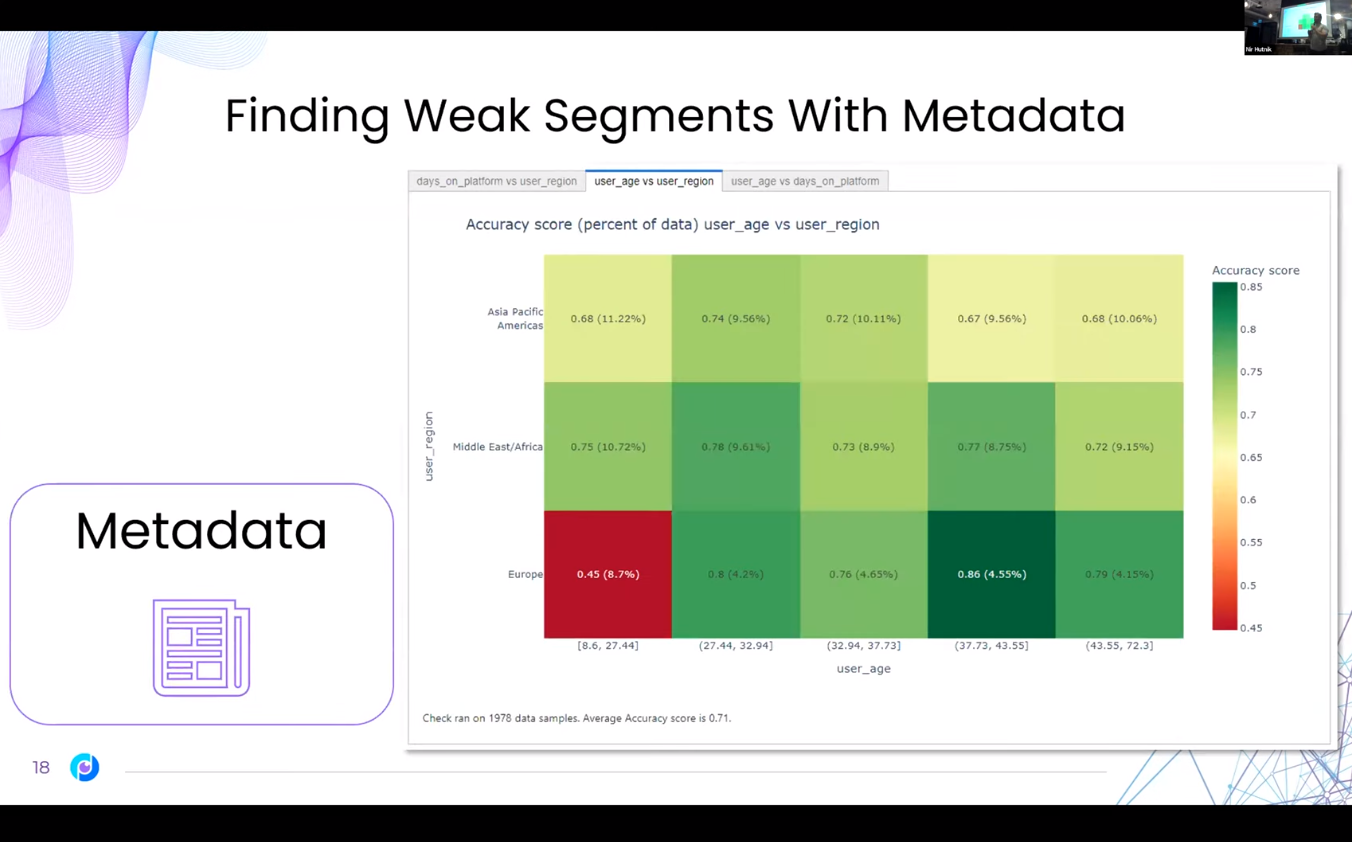

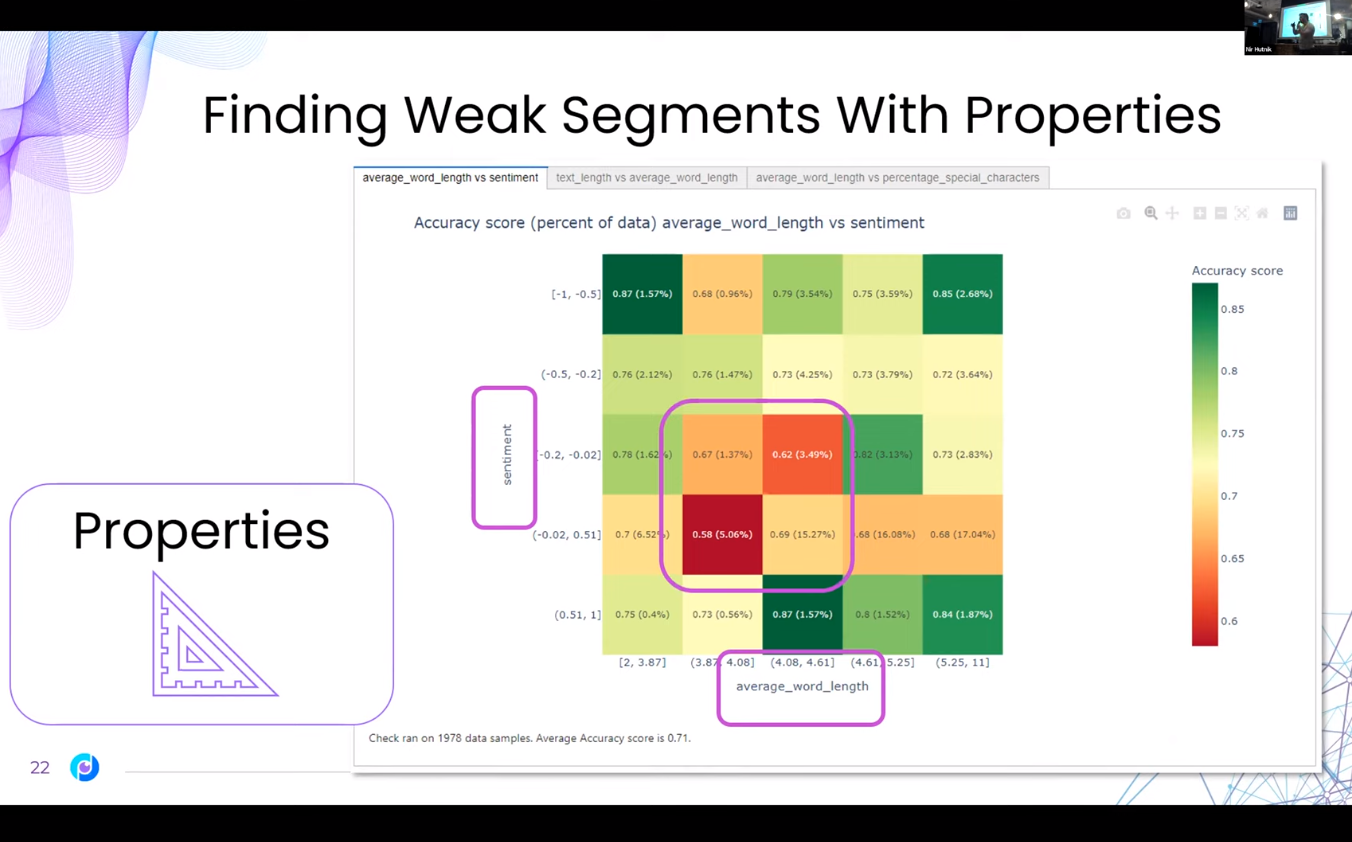

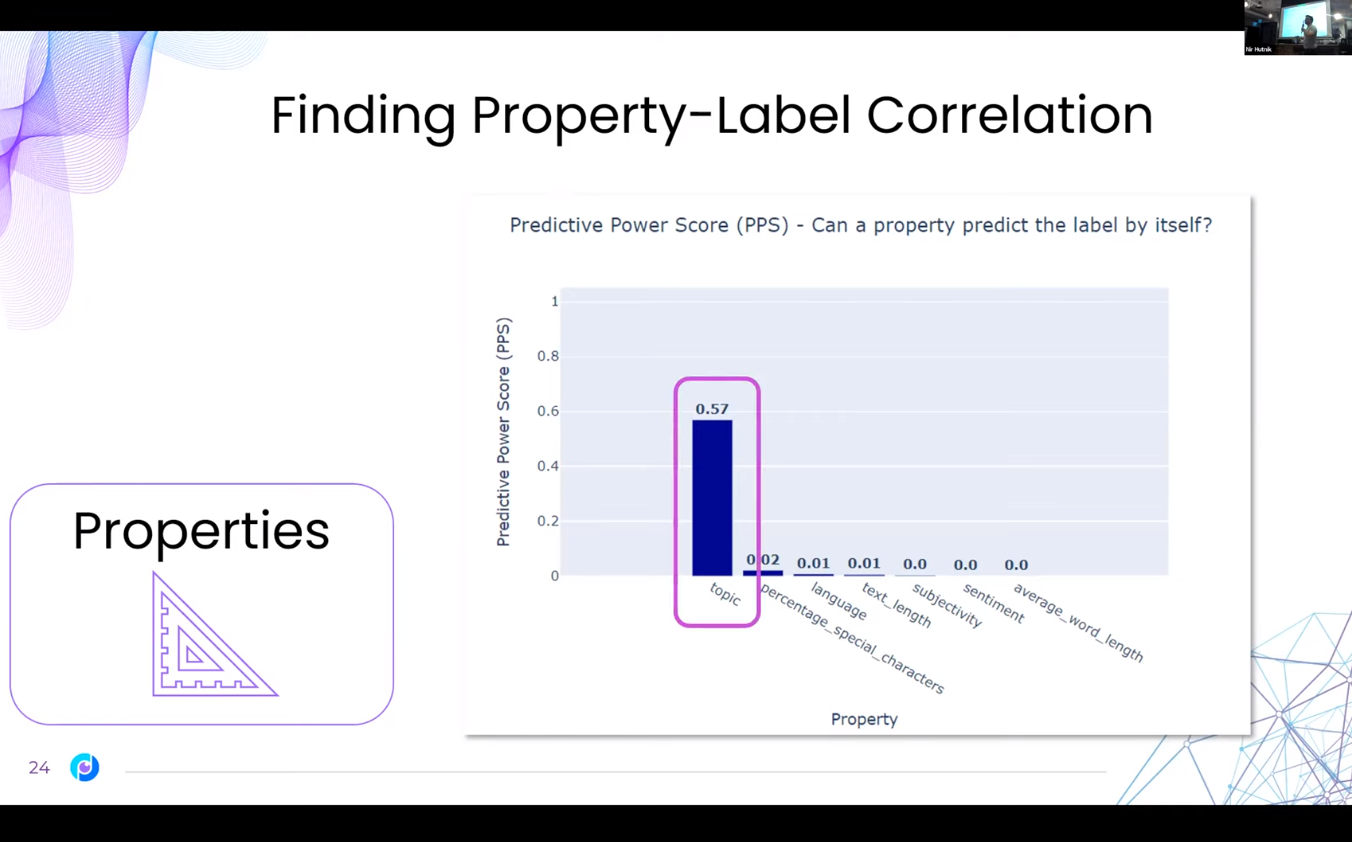

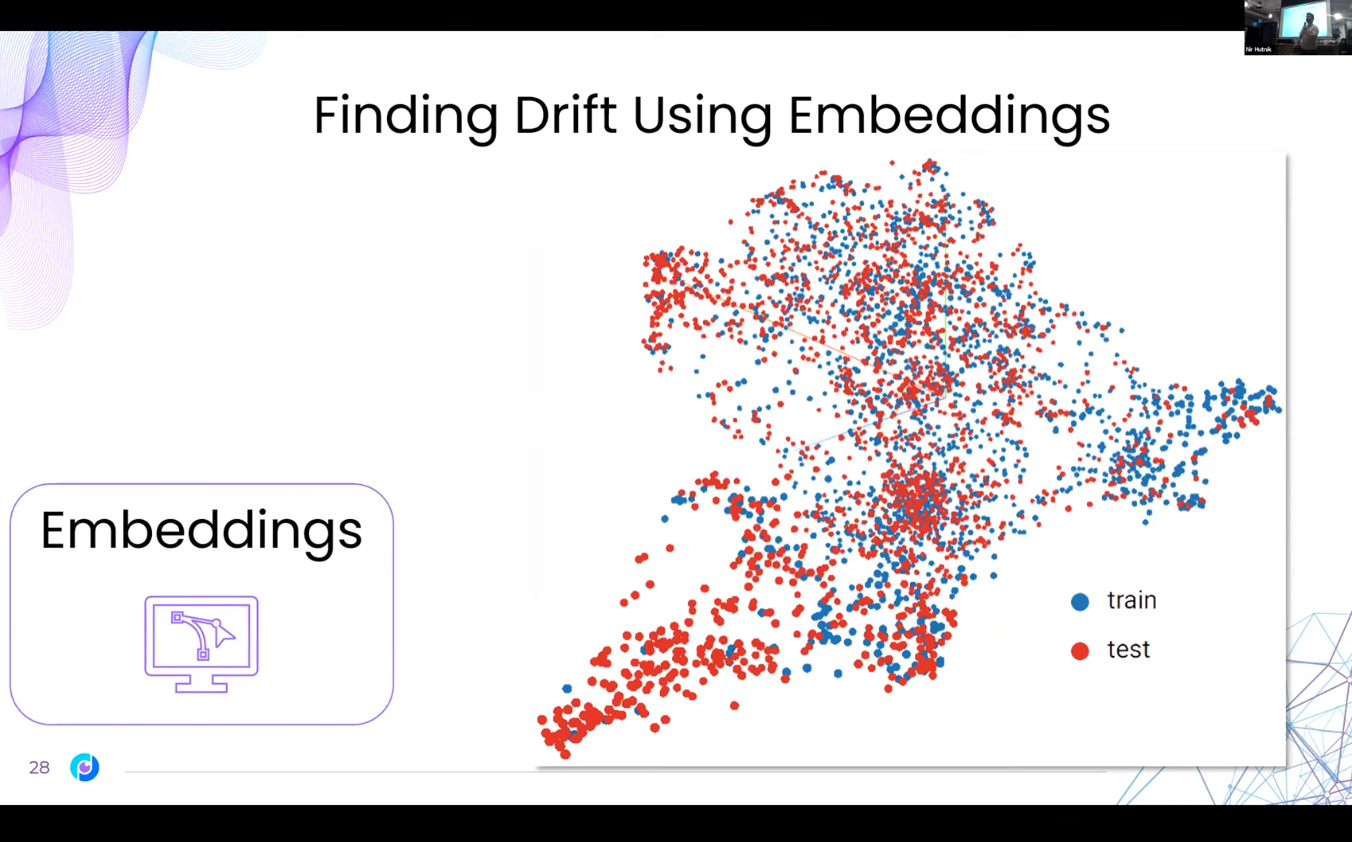

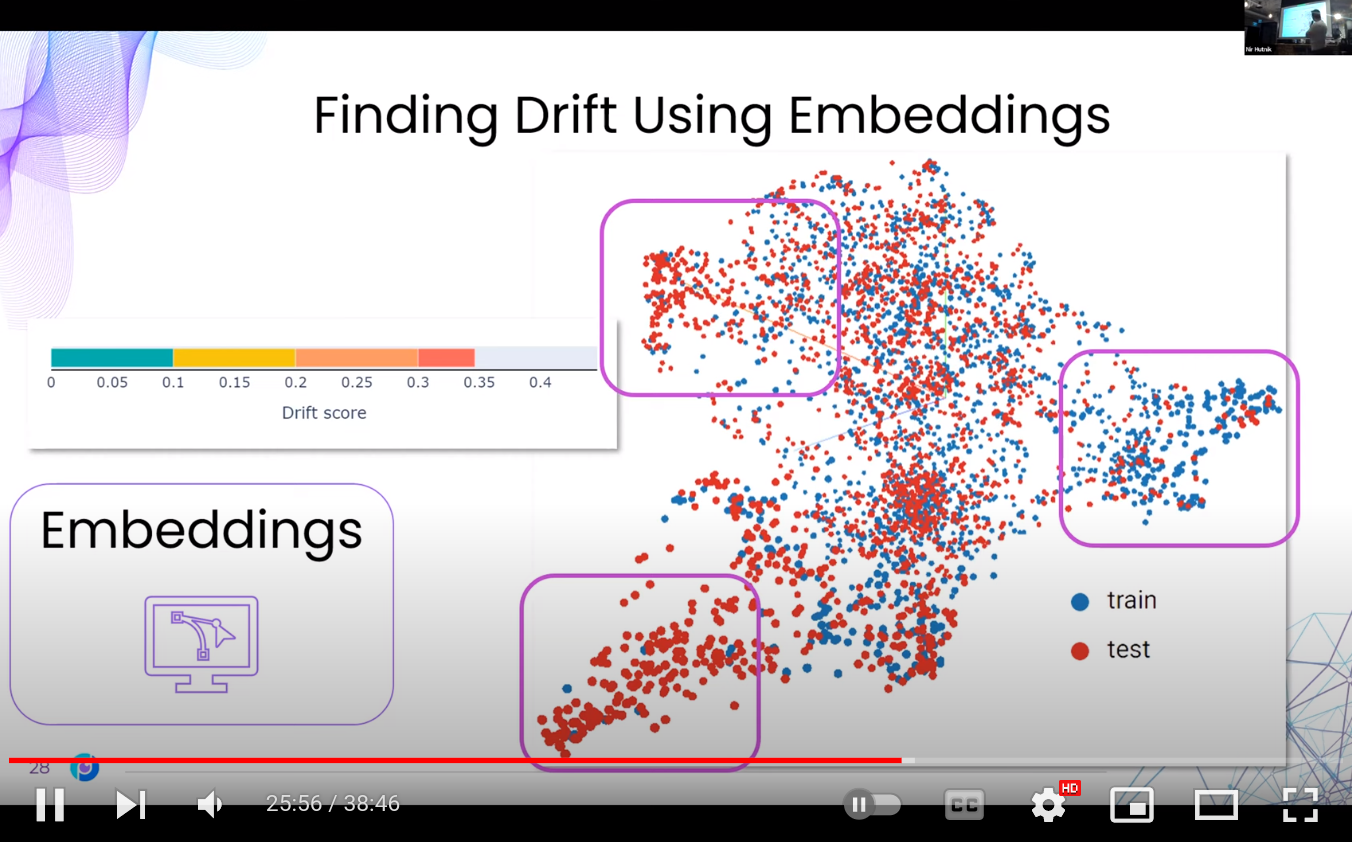

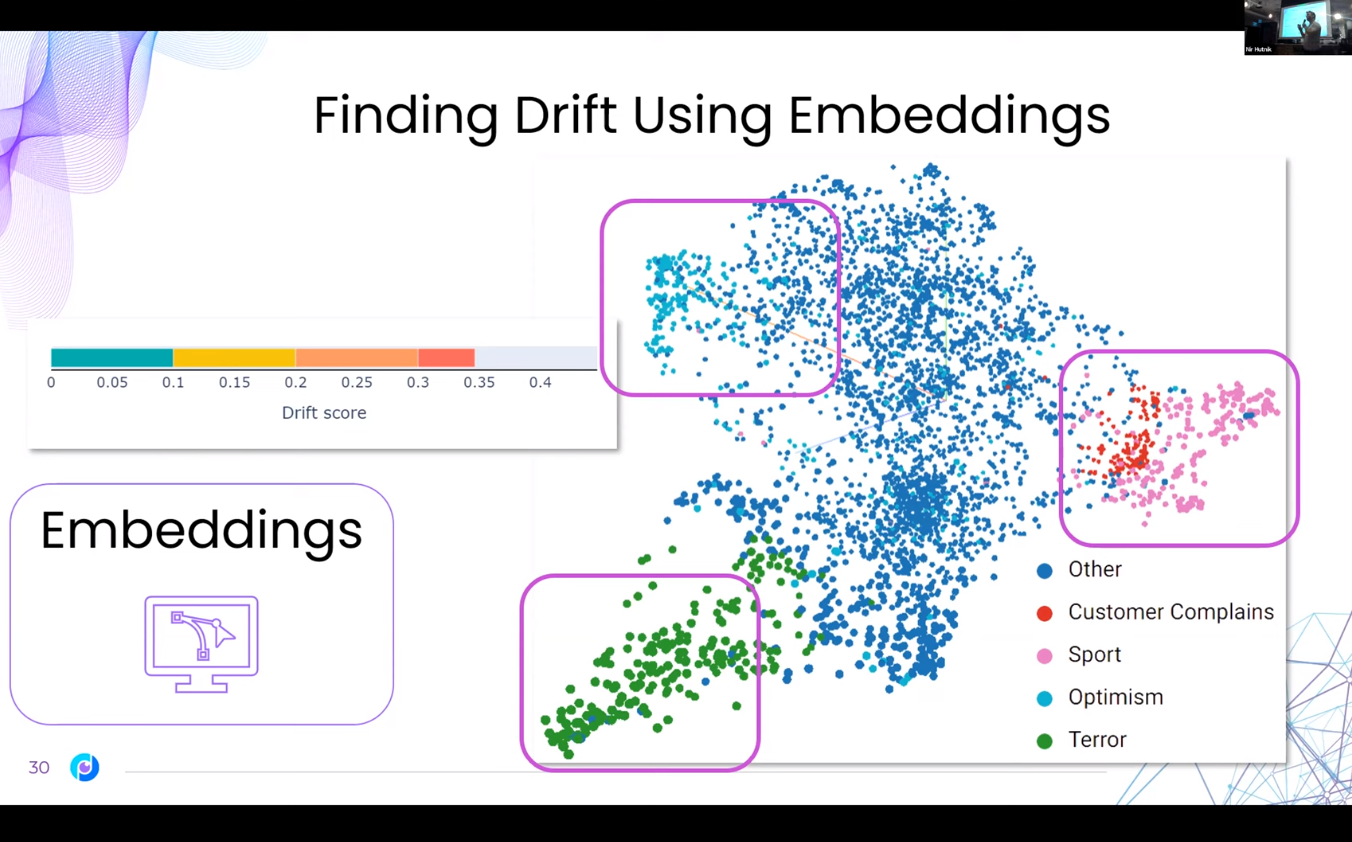

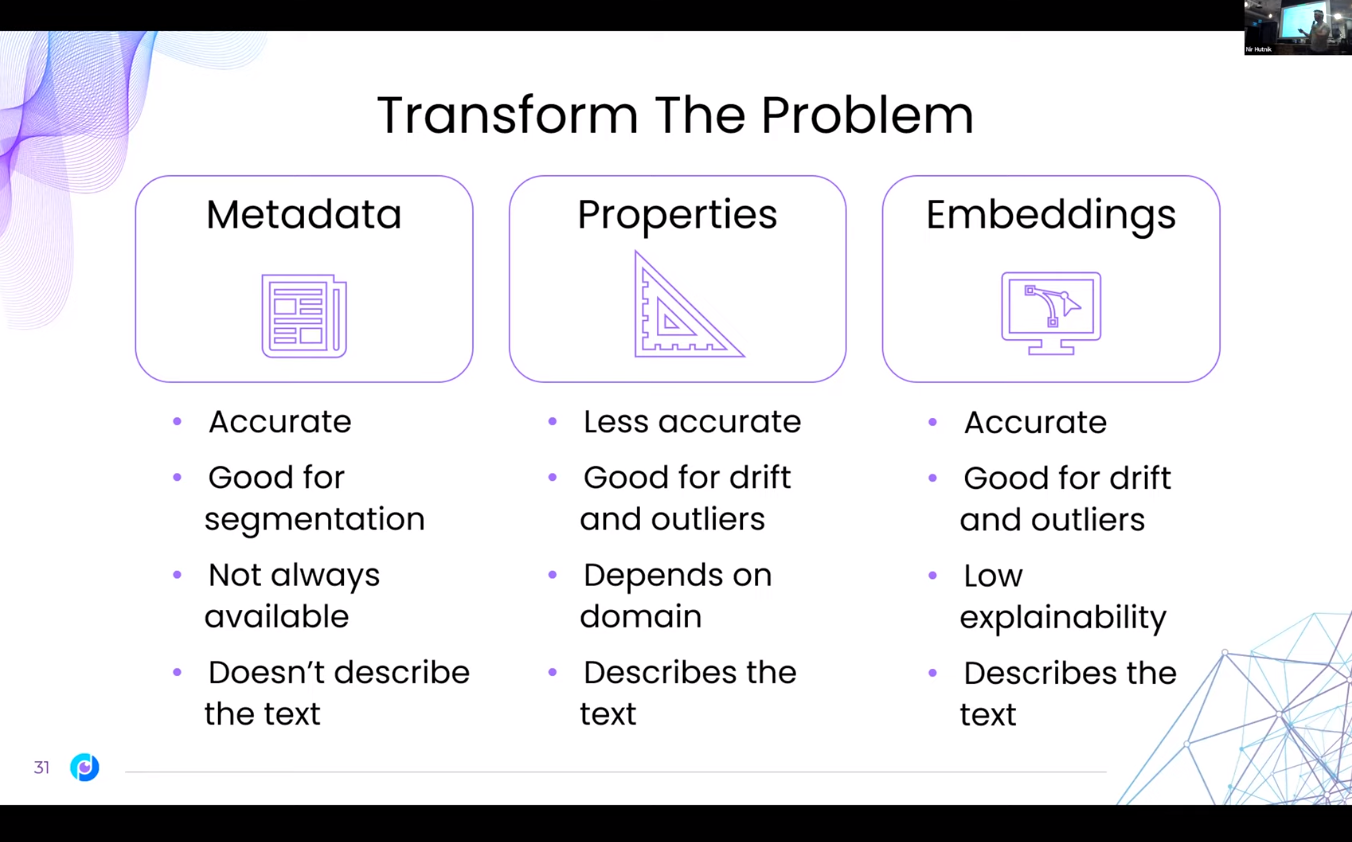

NLP data, like unstructured data in general, is hard to validate: operations such as statistical analysis and segmentation, which are straightforward on structured data, are much harder to carry out on text. In this talk, we will look at common issues in NLP data and models, such as data and prediction drift, sample outliers, and error analysis; discuss how they can impact model performance; and show how to detect them using the deepchecks open source testing package.
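To make drift and outliers concrete, here is a minimal from-scratch sketch in plain Python. It does not use the deepchecks API. The choice of word count as the text property, the Kolmogorov-Smirnov statistic as the drift score, the IQR rule for outliers, and all of the toy data are assumptions made for illustration, not the method presented in the talk.

```python
from bisect import bisect_right
from statistics import quantiles


def word_count(text: str) -> int:
    """A simple text property: number of whitespace-separated tokens."""
    return len(text.split())


def ks_statistic(a: list[float], b: list[float]) -> float:
    """Two-sample Kolmogorov-Smirnov statistic: largest gap between empirical CDFs."""
    a_sorted, b_sorted = sorted(a), sorted(b)
    points = sorted(set(a_sorted) | set(b_sorted))
    return max(
        abs(bisect_right(a_sorted, x) / len(a_sorted)
            - bisect_right(b_sorted, x) / len(b_sorted))
        for x in points
    )


def iqr_outliers(values: list[float], k: float = 1.5) -> list[int]:
    """Indices of values outside [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = quantiles(values, n=4)
    low, high = q1 - k * (q3 - q1), q3 + k * (q3 - q1)
    return [i for i, v in enumerate(values) if v < low or v > high]


# Hypothetical toy data: short reviews at training time, longer ones in production.
train_texts = ["great hotel", "bad room", "nice staff", "loved it", "too noisy"]
prod_texts = [
    "the room was fine but the breakfast was cold",
    "great hotel",
    "check in took far too long and nobody apologised",
    "we would happily stay here again next summer",
    "ok",
]

train_lengths = [word_count(t) for t in train_texts]
prod_lengths = [word_count(t) for t in prod_texts]

# A score near 0 means similar length distributions; near 1 means strong drift.
drift_score = ks_statistic(train_lengths, prod_lengths)
print(f"word-count drift (KS statistic): {drift_score:.2f}")

# Hypothetical word counts containing one unusually long sample.
sample_lengths = [1, 2, 3, 4, 5, 6, 7, 8, 100]
print("outlier indices:", iqr_outliers(sample_lengths))
```

The idea is to reduce each text to a numeric property, after which the usual structured-data statistics apply. Richer properties, such as language, sentiment, or embedding distances, would slot into the same pattern.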

Speaker

Video

Slides

Citation

BibTeX citation:

@online{bochman2023,
  author = {Bochman, Oren},
  title = {Validating {NLP} Data and Models},
  date = {2023-02-28},
  url = {https://orenbochman.github.io/posts/2023/2023-02-28-NLP.IL-Booking.com/NLP-IL-Booking Validating NLP.html},
  langid = {en}
}

For attribution, please cite this work as:

Bochman, Oren. 2023. “Validating NLP Data and Models.” February 28, 2023. https://orenbochman.github.io/posts/2023/2023-02-28-NLP.IL-Booking.com/NLP-IL-Booking Validating NLP.html.